Deploying Llama locally

I. Install Ollama

1. Download Ollama (see https://github.com/ollama/ollama/blob/main/docs/linux.md)

curl -L https://ollama.com/download/ollama-linux-amd64.tgz -o ollama-linux-amd64.tgz

sudo tar -C /usr -xzf ollama-linux-amd64.tgz

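2. Write the systemd service file, for example /etc/systemd/system/ollama.service. The unit runs as user ollama, so that account must exist before the service starts; the Linux guide linked above creates it with useradd.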

[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
# systemd does not expand shell variables here: replace $PATH with the output of `echo $PATH`.
Environment="PATH=$PATH"
# Alternative port:
#Environment="OLLAMA_HOST=0.0.0.0:8090"
Environment="OLLAMA_HOST=0.0.0.0:11434"

[Install]
WantedBy=default.target

Note: 0.0.0.0 makes Ollama listen on every network interface; with 127.0.0.1 it only accepts calls from the local machine.
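
To make the difference between the two bind addresses concrete, here is a minimal Go sketch that classifies a host:port value such as the one given to OLLAMA_HOST. The function name listenScope and its return labels are illustrative assumptions, not part of Ollama.

```go
package main

import (
	"fmt"
	"net"
)

// listenScope reports which kind of address a host:port value binds to.
// The labels it returns are made up for this example.
func listenScope(hostPort string) (string, error) {
	host, _, err := net.SplitHostPort(hostPort)
	if err != nil {
		return "", err
	}
	ip := net.ParseIP(host)
	switch {
	case ip == nil:
		return "not-an-ip", nil // a hostname or empty host; not classified here
	case ip.IsUnspecified():
		return "all-interfaces", nil // 0.0.0.0: reachable from other machines
	case ip.IsLoopback():
		return "loopback", nil // 127.x.x.x: local machine only
	default:
		return "single-interface", nil
	}
}

func main() {
	for _, addr := range []string{"0.0.0.0:11434", "127.0.0.1:11434"} {
		scope, err := listenScope(addr)
		if err != nil {
			fmt.Println(addr, "error:", err)
			continue
		}
		fmt.Println(addr, "->", scope)
	}
}
```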

3. Start Ollama

sudo systemctl daemon-reload

sudo systemctl start ollama

II. Deploy the model

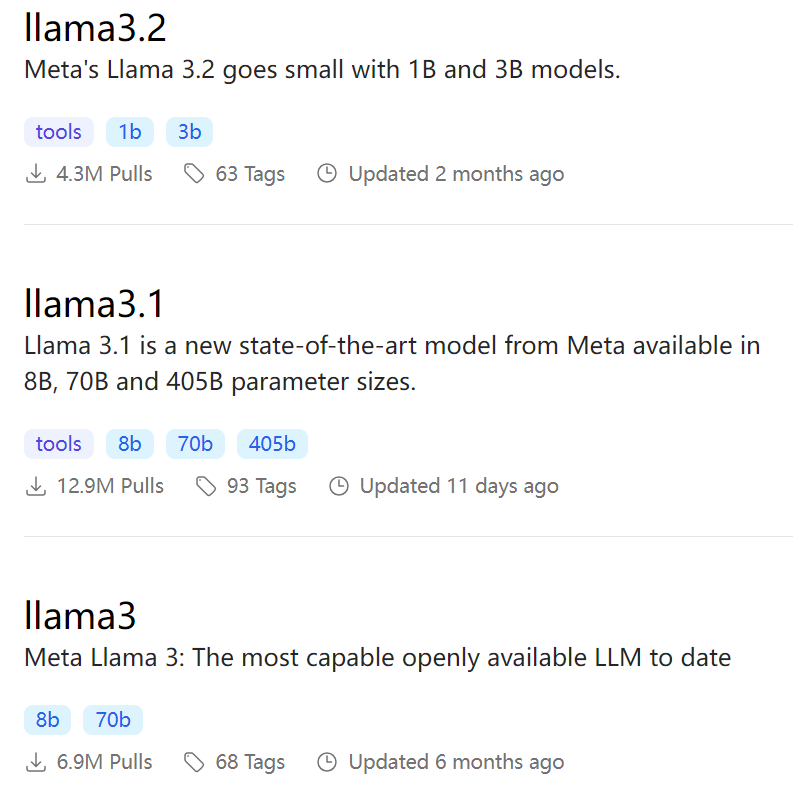

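1. Pull the model. The commands in this guide use the llama3:8b tag (Llama 3, 8B); for Llama 3.1 8B the tag is llama3.1:8b. Reference: https://ollama.com/library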

ollama pull llama3:8b

2. Run the model

ollama run llama3:8b

3. Call the model locally and inspect the response format

curl -X POST http://localhost:11434/api/generate -H "Content-Type: application/json" -d '{"model": "llama3:8b", "prompt": "Why is the sky blue?", "stream": true}'

Note: -H sets a request header; model is the model name; prompt is the prompt text; stream controls whether the reply is streamed.

{"model":"llama3:8b","created_at":"2024-12-12T01:43:25.116545895Z","response":" ","done":false}

In the returned data we only need to parse response (the generated text) and done (whether generation has finished).

III. Write the server and client (pushing over WebSocket)

1. Server: start a WebSocket endpoint with gin. The code is as follows:

package main

import (
	"bufio"
	"bytes"
	"encoding/json"
	"log"
	"net/http"
	"strings"
	"time"

	"github.com/gin-gonic/gin"
	"github.com/gorilla/websocket"
)

// LLaMARequest represents the request to the LLaMA model
type LLaMARequest struct {
	Model  string `json:"model"`
	Prompt string `json:"prompt"`
	Stream bool   `json:"stream"`
}

var upgrader = websocket.Upgrader{
	HandshakeTimeout: 100 * time.Second,
	CheckOrigin: func(r *http.Request) bool {
		return true // allow connections from any origin (fine for a demo; restrict in production)
	},
}

// Each line streamed back for llama3:8b has this shape:
// {"model":"llama3:8b","created_at":"2024-12-11T07:09:30.62313519Z","response":"What","done":false}

func main() {
	r := gin.Default()

	// Serve the chat page
	r.LoadHTMLGlob("templates/*")
	r.GET("/", func(c *gin.Context) {
		c.HTML(http.StatusOK, "index.html", nil)
	})

	// WebSocket endpoint for streaming
	r.GET("/ws", func(c *gin.Context) {
		conn, err := upgrader.Upgrade(c.Writer, c.Request, nil)
		if err != nil {
			log.Println("Failed to upgrade connection:", err)
			return
		}
		defer conn.Close()

		// Read the prompt sent by the front end (client -> server).
		// WebSocket is bidirectional, whereas SSE is one-way.
		_, promptMsg, err := conn.ReadMessage()
		if err != nil {
			log.Println("Failed to read prompt from WebSocket:", err)
			conn.WriteMessage(websocket.TextMessage, []byte("Failed to read prompt"))
			return
		}

		// Build the request
		llamaRequest := LLaMARequest{
			Model:  "llama3:8b",
			Prompt: string(promptMsg),
			Stream: true,
		}

		// Call LLaMA and stream the data to the client
		if err := streamToWebSocket(conn, llamaRequest); err != nil {
			log.Println("Error streaming to WebSocket:", err)
			return
		}
	})

	// Start the server and report a failure to bind the port
	if err := r.Run(":8080"); err != nil {
		log.Fatal(err)
	}
}

// streamToWebSocket streams LLaMA data to the WebSocket
func streamToWebSocket(conn *websocket.Conn, request LLaMARequest) error {
	// Prepare the request body
	jsonData, err := json.Marshal(request)
	if err != nil {
		return err
	}

	// HTTP client timeout; 300s is enough to stream a complete answer
	httpClient := &http.Client{
		Timeout: 300 * time.Second,
	}

	// Call the LLaMA API at the model host's IP; if this server runs on the
	// same machine as the model, 127.0.0.1 works.
	resp, err := httpClient.Post("http://xx.xx.xx.xx:11434/api/generate", "application/json", bytes.NewBuffer(jsonData))
	if err != nil {
		return err
	}
	defer resp.Body.Close()

	// Read the response line by line. Allow lines up to 1 MiB in case one
	// exceeds the scanner's default 64 KiB limit.
	scanner := bufio.NewScanner(resp.Body)
	scanner.Buffer(make([]byte, 0, 64*1024), 1024*1024)
	for scanner.Scan() {
		line := scanner.Text()
		if strings.TrimSpace(line) == "" {
			continue // skip blank lines
		}

		// Parse the JSON object on each line
		var chunk struct {
			Response string `json:"response"`
			Done     bool   `json:"done"`
		}
		if err := json.Unmarshal([]byte(line), &chunk); err != nil {
			log.Printf("Failed to parse chunk: %s, error: %v", line, err)
			continue
		}

		// Forward the chunk to the front end unchanged (server -> client).
		// Chunks carry their own spaces and newlines, so splitting them on
		// whitespace would drop line breaks and insert spaces inside words.
		if chunk.Response != "" {
			conn.SetWriteDeadline(time.Now().Add(10 * time.Second)) // 10s is ample for one chunk
			if err := conn.WriteMessage(websocket.TextMessage, []byte(chunk.Response)); err != nil {
				return err
			}
		}

		// When done is true, send the completion signal
		if chunk.Done {
			conn.WriteMessage(websocket.TextMessage, []byte("[DONE]"))
			break
		}
	}
	if err := scanner.Err(); err != nil {
		return err
	}
	return nil
}

2. Client code (save it as templates/index.html, which the server above loads):

<!DOCTYPE html>
<html>
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>WebSocket Streaming</title>
  <style>
    body {
      font-family: Arial, sans-serif;
      margin: 20px;
    }
    #output {
      font-size: 16px;
      white-space: pre-wrap;
      border: 1px solid #ccc;
      border-radius: 5px;
      padding: 10px;
      background-color: #f9f9f9;
      height: 300px;
      overflow-y: auto;
    }
    #prompt {
      width: 100%;
      padding: 10px;
      margin-top: 10px;
      font-size: 16px;
      border: 1px solid #ccc;
      border-radius: 5px;
    }
    #send {
      padding: 10px 20px;
      font-size: 16px;
      margin-top: 10px;
      cursor: pointer;
      background-color: #007bff;
      color: white;
      border: none;
      border-radius: 5px;
    }
  </style>
</head>

<body>
  <h1>WebSocket Streaming</h1>
  <textarea id="prompt" placeholder="Enter your prompt here"></textarea>
  <button id="send">Send</button>
  <div id="output"></div>
  <script>
    const sendButton = document.getElementById('send');
    const promptInput = document.getElementById('prompt');
    const outputDiv = document.getElementById('output');
    let socket;

    sendButton.addEventListener('click', () => {
      const prompt = promptInput.value.trim();
      if (!prompt) {
        alert('Please enter a prompt!');
        return;
      }

      // Open a new WebSocket connection to the host that served this page,
      // so the page also works when opened from another machine
      const scheme = location.protocol === 'https:' ? 'wss' : 'ws';
      socket = new WebSocket(`${scheme}://${location.host}/ws`);

      socket.onopen = () => {
        console.log('WebSocket connection established');
        outputDiv.textContent = ''; // clear the previous output
        socket.send(prompt); // send the prompt
      };

      socket.onmessage = (event) => {
        if (event.data === '[DONE]') {
          console.log('Streaming complete');
          socket.close();
          return;
        }
        // Append the new text to the output box
        outputDiv.textContent += event.data;
        outputDiv.scrollTop = outputDiv.scrollHeight; // scroll to the bottom
      };

      socket.onerror = (error) => {
        console.error('WebSocket error:', error);
      };

      socket.onclose = () => {
        console.log('WebSocket connection closed');
      };
    });
  </script>
</body>
</html>

3. Example call (remote)